CrowdStrike - A Reflection

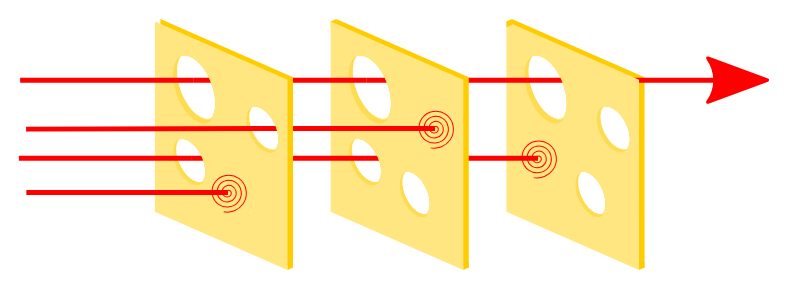

In aviation, there’s a principle known as the “Swiss Cheese” model of accident prevention. This model visualizes the barriers to a severe accident as layers of Swiss cheese, with the holes representing moments when these barriers fail. The model makes clear that multiple systemic errors must have occurred, with the holes lining up across every layer, for a negative outcome to happen. With such a model, we can identify which holes exist in the various layers of the cheese and make them smaller so that issues are less likely.

When failures occur, it’s crucial to conduct an in-depth post-mortem to:

- Discover the root cause of the issue

- Uncover systemic errors

- Examine future mitigation strategies

- Learn deeper lessons about complex systems and human interactions within them

Last week, CrowdStrike, a global cybersecurity firm, pushed a faulty update for its kernel-level security software to customers worldwide, crippling hospital systems, airports, and more. The estimated damage runs into billions of dollars. The root cause was traced to a faulty configuration file that sent affected machines into a boot loop they could not recover from on their own. While many teams across the globe are now re-examining their dependencies and making changes to their systems, I want to share a few general lessons I learned from this incident as a university student.

I am writing these lessons down to remind myself why these additional complexities are necessary. Although many of these lessons are already industry standards and experts can provide more detailed explanations, I find that articulating them helps turn abstract thoughts about good practices into concrete ideas and actionable steps.

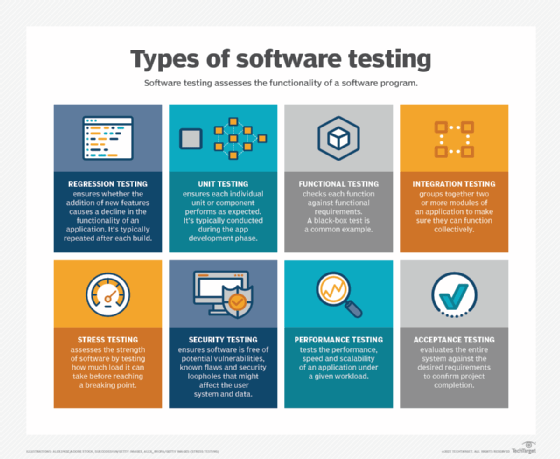

It is pretty obvious that CrowdStrike made some egregious errors for an issue of this scale to occur. Introducing automated systems and adhering to guidelines helps mitigate the potential for disaster. One of the most important aspects of any deployment pipeline is testing. Put simply, any sufficiently complex code that isn’t tested should be assumed to be wrong, really wrong, catastrophically wrong. Oftentimes, code coverage is used as a metric for how thorough the testing is. People then falsely assume that 100% code coverage guarantees no bugs. This is not the case at all: coverage only shows that a line was executed, not that its behavior was checked against every input. Because it is the easiest testing metric to measure, it also falls victim to Goodhart’s Law: the more people optimize for the coverage number itself, the less each percentage point says about reliability. This is not to say that code coverage is unimportant, but rather that one should be careful about what code coverage as a metric actually represents in terms of reliability.
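To make the coverage point concrete for myself, here is a minimal sketch. The `average` function and its test are hypothetical examples of mine, not anything from CrowdStrike’s codebase. The single test executes every line of the function, so a line-coverage tool would report 100%, yet an input the test never tried still crashes it.

```python
def average(values):
    """Return the arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


def test_average():
    # This one test executes every line of average(): 100% line coverage.
    assert average([2, 4, 6]) == 4


test_average()

# An input the test never exercised still brings the function down.
try:
    average([])
except ZeroDivisionError:
    crashed_on_empty_input = True
else:
    crashed_on_empty_input = False

print("crashed on empty input:", crashed_on_empty_input)
```

Coverage tells you which lines ran, not which inputs were considered.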

Thus, it is clear that testing is a very important aspect of proper bug discovery, but if code coverage isn’t a reliable metric, what is the proper way to perform testing on code? What is the industry standard? Well, with no experience in highly scalable or efficient testing systems outside of some small OSS contributions, I can’t really say. However, this article seems to provide some basic standards.

When it comes to unit testing, several best practices are crucial for effective implementation. First, the “Arrange, Act, Assert” (AAA) pattern is fundamental. This involves setting up the necessary context, executing the code under test, and then verifying the results. Using relevant and high-quality test data is essential; it ensures tests are realistic and cover a variety of scenarios, including edge cases and invalid inputs. Another important practice is keeping to one assertion per test method, which keeps tests clear and pinpoints failures accurately. Avoiding test interdependence is critical: each test should be self-contained so that shared state does not cause failures when tests run in a random order. Writing tests before code, known as Test-Driven Development (TDD), helps ensure code is testable, meets requirements, and is more maintainable.
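As a rough illustration of the AAA pattern with one assertion per test, here is a small sketch using Python’s built-in `unittest` module. The `parse_port` function is a hypothetical stand-in for whatever code is under test.

```python
import unittest


def parse_port(text):
    """Hypothetical function under test: parse a TCP port from a config value."""
    port = int(text)
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port


class ParsePortTest(unittest.TestCase):
    def test_accepts_valid_port(self):
        # Arrange: set up the input
        raw_value = "8080"
        # Act: run the code under test
        port = parse_port(raw_value)
        # Assert: verify the result
        self.assertEqual(port, 8080)

    def test_rejects_port_above_range(self):
        # Arrange
        raw_value = "70000"
        # Act and Assert: the invalid input must be rejected
        with self.assertRaises(ValueError):
            parse_port(raw_value)


# Run the two tests explicitly so the snippet works when pasted into a script.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ParsePortTest)
outcome = unittest.TextTestRunner().run(suite)
```

Each test builds its own input, so the two can run in any order without affecting each other.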

Keeping tests short, simple, and visible aids in quick understanding and maintenance. Headless testing, which runs tests without a graphical interface, can be more efficient for non-visual interactions. Testing both positive and negative scenarios ensures robustness, covering expected outcomes and error handling. Mock objects are useful for simulating dependencies and isolating the code being tested, which is vital for reliable and faster testing. Compliance with industry standards should not be overlooked, as it ensures the application meets regulatory requirements and is secure. Ensuring tests are repeatable guarantees reliability, producing the same results consistently. Finally, recognizing cumbersome test setups as a design smell can help improve code modularity and reduce brittleness. Incorporating unit tests into the build process is essential for continuous integration, ensuring that test failures halt progress and encourage immediate fixes. These practices collectively help in catching bugs early, improving code quality, enabling frequent releases, and fostering good programming habits.
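Mock objects were the least intuitive item on that list for me, so here is a minimal sketch using `unittest.mock.Mock` from the standard library. The `deploy_if_healthy` function and the client’s `health_check` and `push` methods are hypothetical names I made up for the example.

```python
from unittest.mock import Mock


def deploy_if_healthy(client, version):
    """Hypothetical deploy step: push only when the health check passes."""
    if client.health_check():
        client.push(version)
        return True
    return False


# A Mock stands in for the real (hypothetical) deployment client,
# so the test needs no network access and no real servers.
healthy_client = Mock()
healthy_client.health_check.return_value = True
assert deploy_if_healthy(healthy_client, "1.2.3") is True
healthy_client.push.assert_called_once_with("1.2.3")

# Negative scenario: a failing health check must block the push.
unhealthy_client = Mock()
unhealthy_client.health_check.return_value = False
assert deploy_if_healthy(unhealthy_client, "1.2.3") is False
unhealthy_client.push.assert_not_called()
```

The same pair of checks also covers the positive and negative scenarios mentioned above.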

Obviously, the level of testing and the methodology behind it will vary from organization to organization. NASA’s testing requirements are very different from Google’s, which are very different from a regular startup’s. But basic testing principles are important to consider and implement for almost any software. Testing is a difficult part of development to optimize. Usually, more testing means more developer time, more maintenance of the testing software, and more compute usage. The cost is recurring, while the benefit is the prevention of a negative outcome. Additional testing, like most other things, suffers from diminishing returns. That makes it highly susceptible to cuts from teams attempting to reduce costs, and to complacency about the systems. The trade-off comes down to weighing the cost of marginal testing against the likelihood of the negative outcome it prevents and the severity of that outcome for future business. Parts of that severity, like customer goodwill and brand perception, are often difficult to quantify. As an example, even without the lawsuits and direct customer churn, the brand damage to CrowdStrike will likely have a severe impact on their future earnings, and a robust testing infrastructure would likely have paid for itself over time. But, obviously, if they hadn’t had this issue and had continued to get away with poor testing practices, they would have saved the cost of that testing.
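One simplified way to frame that trade-off is as an expected-value comparison. This is a sketch of my own, and every number in it is made up purely for illustration; real figures, especially for goodwill and brand damage, are much harder to pin down.

```python
def extra_testing_pays_off(marginal_cost, p_before, p_after, severity):
    """Compare the cost of extra testing with the expected loss it avoids."""
    expected_loss_avoided = (p_before - p_after) * severity
    return expected_loss_avoided > marginal_cost, expected_loss_avoided


# All figures below are invented for illustration only.
worth_it, avoided = extra_testing_pays_off(
    marginal_cost=50_000,   # yearly cost of the extra testing
    p_before=0.02,          # yearly chance of a major incident without it
    p_after=0.005,          # yearly chance of a major incident with it
    severity=10_000_000,    # cost of one major incident
)
print("worth it:", worth_it, "expected loss avoided:", round(avoided))
```

The hard part in practice is not the arithmetic but estimating the probabilities and the severity.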

While there are more testing practices that CrowdStrike should follow, every organization is different. Engineering is about tradeoffs, and sometimes accepting a greater risk of failure is okay if it avoids a significant testing cost. The exception, of course, is safety-critical systems. There, businesses should follow compliance standards, mandated by industry groups and government institutions, to lower the probability of negative events. Obviously, there is a lot of research on the proper way to incorporate safe engineering practices. I’m on a bit of a tangent, so let’s continue.

Unit testing and integration testing are important components of a robust software testing strategy, ensuring that individual units of code work correctly and that the system functions as a cohesive whole. However, some software can benefit greatly from fuzz testing, a form of testing designed to identify vulnerabilities by inputting large amounts of random data to the system. Fuzz testing is crucial because it helps uncover edge cases and unexpected behaviors that traditional testing methods might miss. This type of testing can reveal how software handles malformed or unexpected inputs, often exposing security flaws, memory leaks, and crashes. By identifying these weaknesses, fuzz testing helps developers create more resilient and secure software, ultimately leading to a better user experience and reducing the risk of exploitation by malicious actors.
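Here is a toy sketch of the idea using only the standard library. Real fuzzers are far more sophisticated (coverage-guided, mutation-based, and so on), and both parsers below are hypothetical formats I invented for the example. The fuzzer throws random bytes at a parser and records any failure other than a clean `ValueError` rejection.

```python
import random


def parse_record_naive(data):
    """Hypothetical parser with no bounds checks: a count byte, then that
    many fields, each a length byte followed by that many bytes."""
    count = data[0]
    fields, pos = [], 1
    for _ in range(count):
        length = data[pos]
        pos += 1
        fields.append(data[pos:pos + length])
        pos += length
    return fields


def parse_record_checked(data):
    """Same format, but malformed input is rejected with ValueError."""
    if not data:
        raise ValueError("empty input")
    count = data[0]
    fields, pos = [], 1
    for _ in range(count):
        if pos >= len(data):
            raise ValueError("truncated record")
        length = data[pos]
        pos += 1
        if pos + length > len(data):
            raise ValueError("field runs past end of input")
        fields.append(data[pos:pos + length])
        pos += length
    return fields


def fuzz(parser, iterations=5_000, seed=0):
    """Feed random byte strings to parser; collect unexpected exceptions."""
    rng = random.Random(seed)
    failures = []
    for _ in range(iterations):
        size = rng.randint(0, 32)
        blob = bytes(rng.randrange(256) for _ in range(size))
        try:
            parser(blob)
        except ValueError:
            pass  # cleanly rejecting malformed input is the expected behavior
        except Exception as exc:
            failures.append((blob, exc))
    return failures


naive_failures = fuzz(parse_record_naive)
checked_failures = fuzz(parse_record_checked)
print("naive parser crashed on some input:", len(naive_failures) > 0)
print("checked parser unexpected failures:", len(checked_failures))
```

The naive parser reads past the end of its input on truncated records, which is exactly the kind of edge case a hand-written test suite tends to miss.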

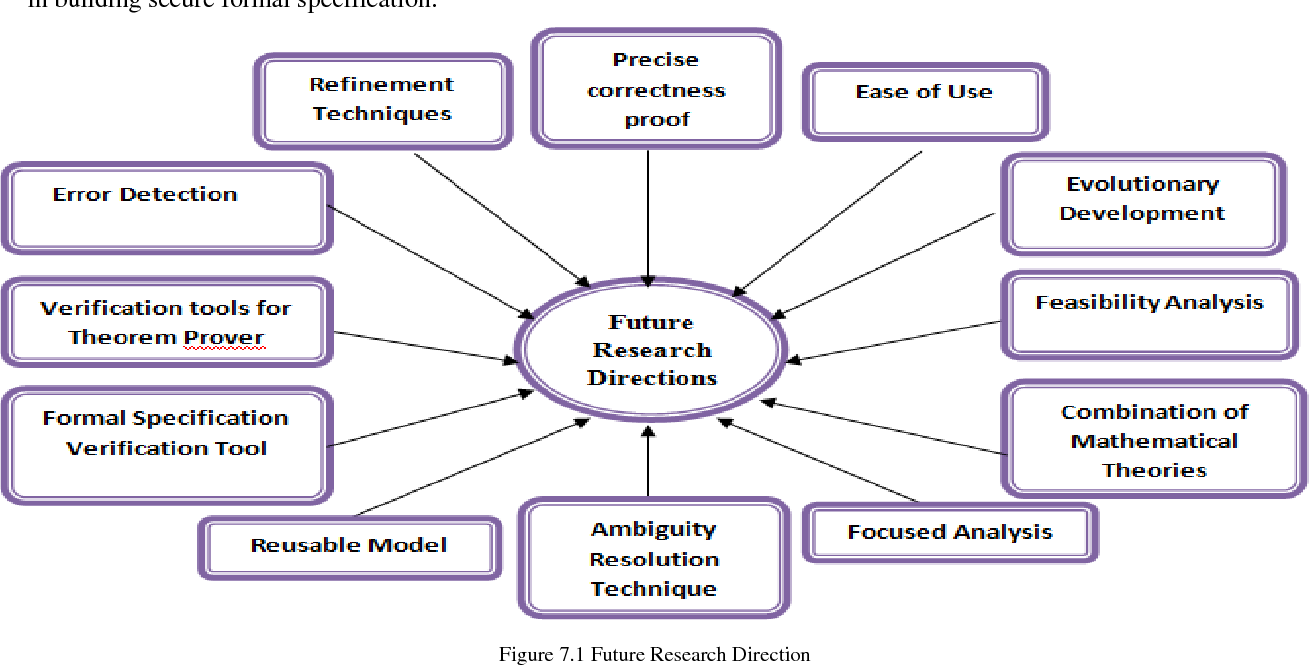

In addition to regular testing, more formal testing may be appropriate depending on the domain. Safety-critical software often uses formal verification tools to provide a higher level of rigor. While this level of detail is likely overkill for your standard CRUD app, the barriers to using verification are decreasing, making it more accessible and reasonable. Part of my research at university is using formal methods to verify operating system modeling and implementation. While much more experienced people have written extensively about this field, with all of its costs and tradeoffs, I do find it an interesting avenue for greater rigor in testing critical infrastructure systems.

Once the code has passed all its tests, is it ready for deployment? Not quite… This is the time to test everything in action before pushing it out into the world. The staging environment is the best place to make sure everything behaves as expected in a real-world scenario without impacting customers. A staging environment is a clone of the production system with similar data.

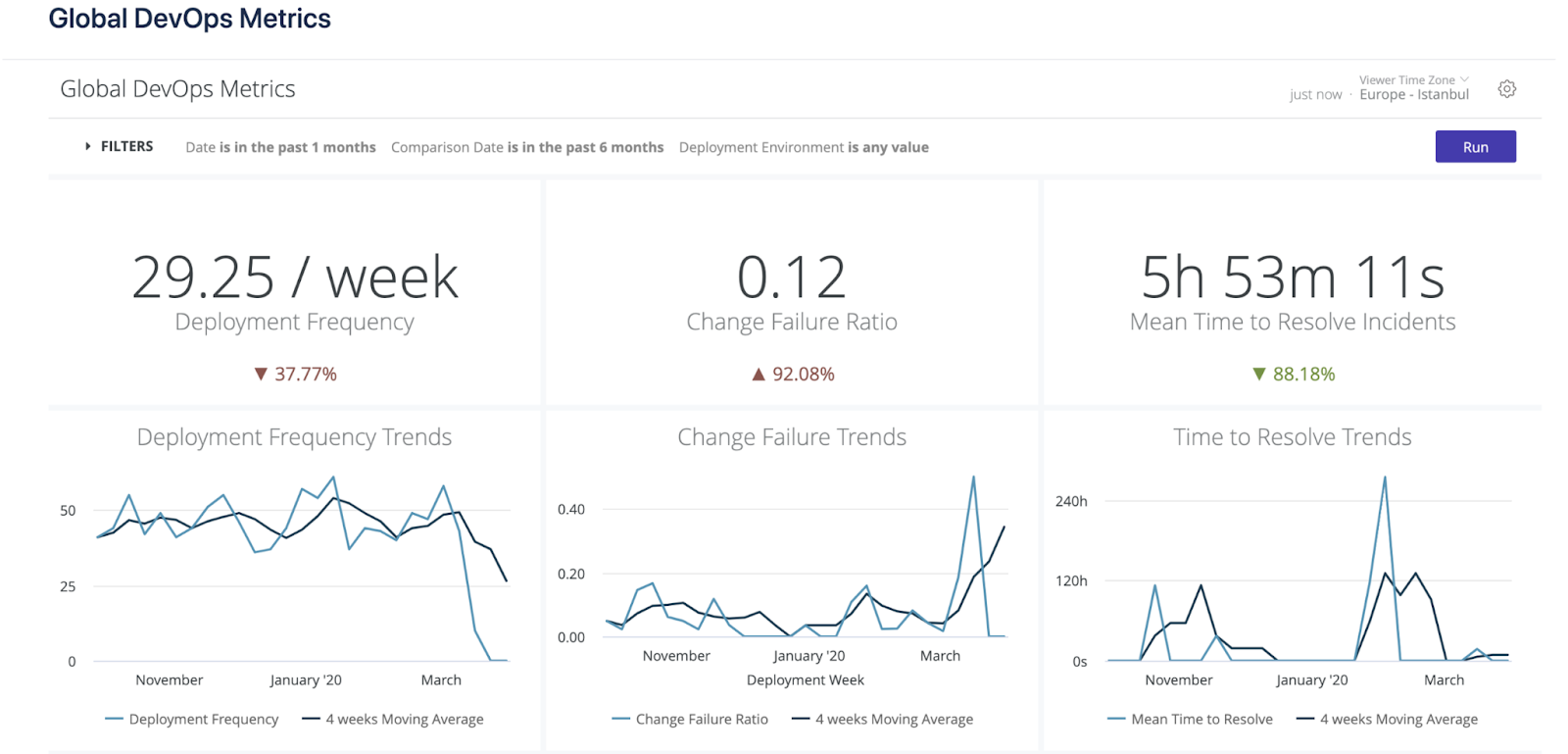

Another standard practice is rolling out deployments slowly and monitoring their status. This means you roll out the change to only a handful of customers at first and then slowly add more and more customers until the entire deployment has completed successfully. During this process, insight into the status of customers is important. Placing status metrics and monitoring them throughout the deployment is key. Alerting systems and automatic rollback (discussed in the next section) are additional tools to help in this process. With CrowdStrike being in security, it’s often imperative to roll out changes to customers quickly to protect them from cyber-threats. For critical events like these, there should be an even stronger automated process! This would help ensure that repeated critical deployments are rolled out quickly while preventing the kind of mass disaster we have seen. Refining this process to find the right balance of speed and reliability may take some time and practice, but it can be done through simulated deployments rather than on your customers. Slow rollouts are an easy and effective strategy to reduce the damage of a potential issue with a deployment.
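To picture how a slow rollout might be automated, here is a simplified sketch. The stage fractions, the failure threshold, and the `deploy` and `is_healthy` callbacks are all assumptions of mine; a real system would also wait between stages and read health from proper monitoring.

```python
def staged_rollout(hosts, deploy, is_healthy,
                   stages=(0.01, 0.10, 0.50, 1.0), max_failure_rate=0.05):
    """Deploy to a growing fraction of hosts, halting if too many are unhealthy.

    Returns (completed, updated_hosts).
    """
    updated = []
    for fraction in stages:
        target = max(1, int(len(hosts) * fraction))
        # Only deploy to hosts that earlier stages have not reached yet.
        for host in hosts[len(updated):target]:
            deploy(host)
            updated.append(host)
        if not updated:
            continue  # empty host list: nothing to check
        failures = sum(1 for host in updated if not is_healthy(host))
        if failures / len(updated) > max_failure_rate:
            return False, updated  # halt before the next, larger stage
    return True, updated


# Simulated bad update: every host that receives it becomes unhealthy.
hosts = [f"host-{i}" for i in range(1000)]
broken = set()
completed, touched = staged_rollout(
    hosts,
    deploy=broken.add,
    is_healthy=lambda host: host not in broken,
)
print("completed:", completed, "hosts touched:", len(touched))
```

In this simulation the bad update stops after the first stage, so only 1% of the hosts are affected instead of all of them.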

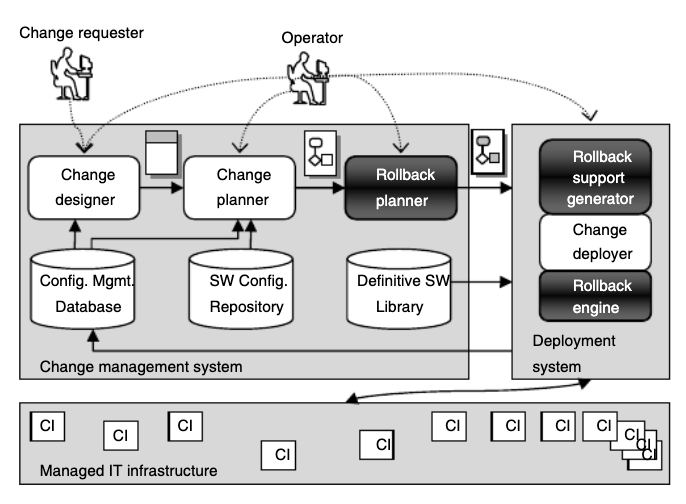

When doing a slow rollout, it is smart to include rollback procedures in case of failure. Rollback procedures are fairly self-explanatory: if a system is not behaving as expected during a rollout, one should quickly revert the change across all customers and then figure out the issue. While this was not applicable in the specific case of CrowdStrike, as the update had caused computers to go into a boot loop, it is still an important lesson to reflect on in case of failure. These procedures can be automated based on the metric reporting set up for the rollout. If some metrics pass a threshold of concern, a rollback can be initiated automatically.
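A threshold-based trigger like that could look roughly like the sketch below. The `Sample` type, the 2% error-rate threshold, the window size, and the `roll_back` callback are hypothetical choices of mine, not a description of any real monitoring product.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One monitoring interval: how many requests ran and how many failed."""
    requests: int
    errors: int


def should_roll_back(samples, max_error_rate=0.02, min_requests=100):
    """Decide whether recent samples cross the threshold of concern."""
    total = sum(s.requests for s in samples)
    errors = sum(s.errors for s in samples)
    if total < min_requests:
        return False  # not enough data to judge yet
    return errors / total > max_error_rate


def monitor(sample_stream, roll_back, window=5):
    """Watch samples as they arrive; trigger roll_back once and stop."""
    recent = []
    for sample in sample_stream:
        recent = (recent + [sample])[-window:]  # keep only the last few samples
        if should_roll_back(recent):
            roll_back()
            return True
    return False


actions = []
stream = [Sample(200, 1), Sample(200, 2), Sample(200, 30), Sample(200, 40)]
rolled_back = monitor(stream, roll_back=lambda: actions.append("rollback"))
print("rolled back:", rolled_back, "actions:", actions)
```

The `min_requests` guard is there so a single failed request early in the rollout does not trigger a rollback on its own.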

So, to summarize the key takeaways: proper software deployment can be complex and resource-intensive, but there are systems, strategies, and tools that help mitigate deployment issues. First, test the code. It’s crazy one has to say this in 2024, but untested code is crash-your-server code. Test it! Set up an easy testing pipeline and figure out what is tested thoroughly and what needs work, potentially with quantitative and qualitative metrics to help. In addition to regular testing, test a deployment in a staging environment and make sure no surprises arise. Done well, this gives a highly representative test of what the deployment process will be. It doesn’t only help prevent catastrophes; it can also provide insight into what the update process will be like on the client side and surface minor hiccups you didn’t anticipate. Incremental rollouts with monitoring and rollback procedures are a must. Using automated tooling to assist in this whole process reduces both the manpower required and the human errors that cause issues during deployment.

But writing about important processes and systems is one thing. Implementing them is another. Thus, I plan to try to implement the processes I described, at a small scale of course, with programs I have previously made.
